A “gold standard” for computational materials science codes


Physicists and materials scientists can choose from a whole family of computer codes that simulate the behavior of materials and predict their properties. The accuracy of the results obtained by these codes depends on the approximations employed and the numerical parameters chosen. To verify that the results from different codes are comparable, consistent with each other, and reproducible, a large group of scientists has carried out the most comprehensive verification study so far. Published in the first 2024 issue of Nature Reviews Physics, the study provides a reference dataset and a set of guidelines for assessing and improving existing and future codes.

Artistic representation of the equations of state of the periodic table elements. The ten different crystal structures simulated for each of the 96 studied elements are depicted on the table. The illustration is on the cover of the January 2024 issue of Nature Reviews Physics.

Giovanni Pizzi, EPFL

The computer codes used by physicists and materials scientists around the world are the basis of tens of thousands of scientific articles annually. These codes are typically based on density-functional theory (DFT), a modelling method that uses several approximations to reduce the otherwise mind-boggling complexity of calculating the behavior of electrons according to the laws of quantum mechanics. The differences between the results obtained with various codes come down to the numerical approximations being made and the choice of the numerical parameters behind those approximations. These choices are often tailored to study specific classes of materials, or to calculate properties that are key for specific applications – say, conductivity for potential battery materials.

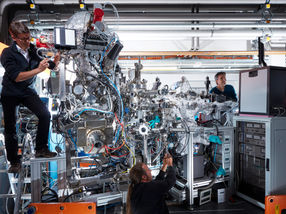

In the recently published Nature Reviews Physics article, the authors introduce the most comprehensive verification effort so far on solid-state DFT codes and provide their colleagues with the tools and a set of guidelines for assessing and improving existing and future codes. The project was led and coordinated by the National Centre for Computational Design and Discovery of Novel Materials (MARVEL) at the École Polytechnique Fédérale de Lausanne (EPFL, Switzerland). Several major contributions came from Prof. Thomas D. Kühne, Director of the Center for Advanced Systems Understanding (CASUS) – an institute of the Helmholtz-Zentrum Dresden-Rossendorf – and its Scientific Manager Dr. Hossein Mirhosseini as part of a developer team working with CP2K: an open-source software package that can perform atomistic simulations of solid state, liquid, molecular, material, crystal, and biological systems.

More elements, more crystal structures

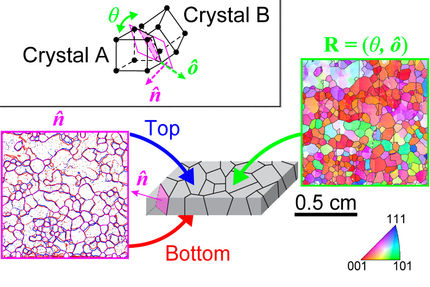

The published work builds on a Science article from 2016 that compared 40 computational approaches by using each of them to calculate the energies of a test set of 71 crystals, each one corresponding to an element on the periodic table. The authors concluded that the mainstream codes were in very good agreement with each other. “That work was reassuring, but it did not really explore enough chemical diversity,” says Dr. Giovanni Pizzi, leader of the Materials Software and Data Group at the Paul Scherrer Institute PSI in Villigen (Switzerland), and corresponding author of the new paper. “In this study we considered 96 elements, and for each of them we simulated ten possible crystal structures.” In particular, for each of the first 96 elements of the periodic table, four different unaries and six different oxides were studied. Unaries are crystals made only of atoms of the element itself, whereas oxides also include oxygen atoms. The result is a dataset of 960 materials and their properties, calculated by two independent all-electron codes, FLEUR and WIEN2k, which explicitly consider all the electrons in the atoms under consideration.

That dataset can now be used by anyone as a benchmark to test the precision of other codes, in particular those based on pseudopotentials where, unlike in all-electron codes, the electrons that do not participate in chemical bonds are treated in a simplified way to make the computation lighter. The authors benchmarked the results of nine such codes against those obtained by all-electron codes. “Together with other CP2K team members we at CASUS were responsible for the development of workflows and standard computation protocols, as well as for performing calculations with two pseudopotential methods implemented in the CP2K software, namely Quickstep and SIRIUS,” says Mirhosseini. “That means that we benchmarked the equations of state of all 960 crystals calculated with the two methods against the all-electron reference results. Our results could provide a basis for improving the implemented methods in CP2K,” he adds.
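To give a rough feel for what benchmarking an equation of state against a reference can look like, here is a minimal Python sketch. It is not the metric used in the study: the harmonic energy-volume model, the function names, the units, and all numbers are hypothetical and chosen purely for illustration. The sketch shifts two energy-volume curves so that each has its minimum at zero (absolute energies are not comparable between codes) and then reports their root-mean-square difference.

```python
import math


def harmonic_eos(volume, e0, v0, k):
    """Toy energy-volume curve: a parabola around the equilibrium volume v0.

    Hypothetical stand-in for a real equation of state; illustration only.
    """
    return e0 + 0.5 * k * (volume - v0) ** 2


def rms_curve_difference(e_ref, e_test):
    """RMS difference between two E(V) curves sampled at the same volumes.

    Each curve is first shifted so that its lowest energy is zero, because
    different codes use different absolute energy references.
    """
    if len(e_ref) != len(e_test) or not e_ref:
        raise ValueError("curves must be non-empty and of equal length")
    ref_min, test_min = min(e_ref), min(e_test)
    squared = [((a - ref_min) - (b - test_min)) ** 2
               for a, b in zip(e_ref, e_test)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical sample volumes (e.g. in cubic angstroms per atom).
volumes = [18.0 + 0.5 * i for i in range(9)]

# Made-up "reference" and "candidate" curves with slightly different
# equilibrium volume and stiffness, and very different absolute energies.
reference = [harmonic_eos(v, -10.0, 20.0, 0.30) for v in volumes]
candidate = [harmonic_eos(v, -250.0, 20.1, 0.31) for v in volumes]

print(rms_curve_difference(reference, candidate))
```

A smaller value means the candidate curve follows the reference more closely over the sampled volume range. A real verification workflow would use the comparison protocol and reference data published with the study rather than this simplified measure.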

The study also includes a series of recommendations for users of DFT codes, to make sure that computational studies are reproducible, on how to use the reference dataset to conduct future verification studies, and on how to expand it to include other families of codes and other materials properties. “We hope our dataset will be a reference for the field for years to come,” says Pizzi.

Expand dataset, train researchers

After having shown the importance of the reference dataset, a major aim is now to expand it with more structures and more complex properties calculated with more advanced functionals. But there are even more challenges to tackle: Instead of exclusively focusing on how accurate the different codes are, the team also plans to take into account how expensive they are in terms of time and computational power. This would help fellow scientists to find the most cost-efficient way to do their calculations.

Alongside these developments an even larger consortium has already been formed. However, it is not primarily concerned with molecules or code. It targets the next generation of researchers. “Doctoral students and early-career postdocs need to be made aware of the importance of validating and evaluating DFT modeling results,” says Kühne. “Hence, the consortium is going to boost the skills of these young researchers so that they are able to implement code verification processes in their research projects. The benefits are obvious and include improved accuracy in general as well as computational cost optimized according to the desired precision.”