Light-carrying chips advance machine learning

In the digital age, data traffic is growing at an exponential rate. The demands on computing power for applications in artificial intelligence, such as pattern and speech recognition in particular, or for self-driving vehicles, often exceed the capacities of conventional computer processors. Working together with an international team, researchers at the University of Münster are developing new approaches and process architectures which can cope with these tasks extremely efficiently. They have now shown that so-called photonic processors, with which data is processed by means of light, can process information much more rapidly and in parallel, something electronic chips are incapable of doing. The results have been published in the journal “Nature”.

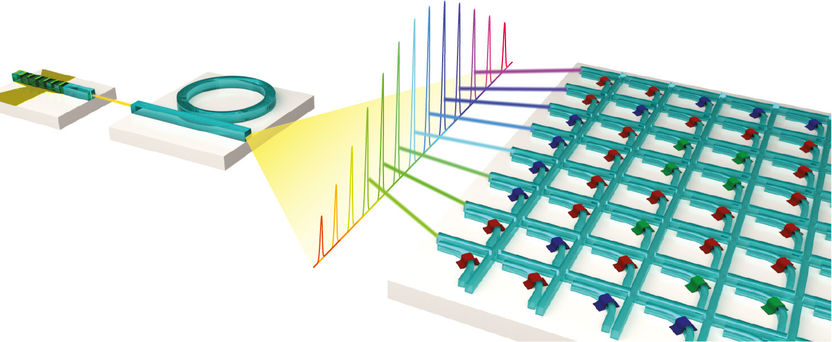

Schematic representation of a processor for matrix multiplications which runs on light. Together with an optical frequency comb, the waveguide crossbar array permits highly parallel data processing.

Copyright: WWU/AG Pernice

Background and methodology

Light-based processors for speeding up tasks in the field of machine learning enable complex mathematical tasks to be processed at enormously fast speeds (10¹² to 10¹⁵ operations per second). Conventional chips such as graphics cards or specialized hardware like Google’s TPU (Tensor Processing Unit) are based on electronic data transfer and are much slower. The team of researchers led by Prof. Wolfram Pernice from the Institute of Physics and the Center for Soft Nanoscience at the University of Münster implemented a hardware accelerator for so-called matrix multiplications, which represent the main processing load in the computation of neural networks. Neural networks are a series of algorithms loosely modeled on the human brain. They are helpful, for example, for classifying objects in images and for speech recognition.
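To illustrate why matrix multiplications make up the main processing load, the following minimal Python sketch computes a single fully connected layer and compares the number of multiply-accumulate operations with the number of activation evaluations. The layer sizes, weights and function names are illustrative assumptions and are not taken from the study.

```python
def dense_layer(weights, inputs):
    """Multiply a weight matrix (list of rows) by an input vector, then apply ReLU."""
    outputs = []
    for row in weights:
        # One multiply-accumulate per weight in the row.
        total = sum(w * x for w, x in zip(row, inputs))
        # ReLU activation: a single cheap operation per output value.
        outputs.append(max(0.0, total))
    return outputs


def operation_counts(n_outputs, n_inputs):
    """Return (multiply-accumulates, activation evaluations) for one layer."""
    return n_outputs * n_inputs, n_outputs


# Hypothetical 2x3 weight matrix, chosen only for illustration.
weights = [[0.5, -1.0, 2.0],
           [1.0, 1.0, -1.0]]
inputs = [1.0, 2.0, 3.0]
layer_output = dense_layer(weights, inputs)

# For an assumed layer with 1000 inputs and 1000 outputs, the matrix
# multiplication needs a thousand times more operations than the activation.
macs, activations = operation_counts(1000, 1000)
```

In this sketch the matrix product grows with the product of input and output size, while the activation step grows only with the output size, which is why an accelerator for matrix multiplications targets the bulk of the work.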

The researchers combined the photonic structures with phase-change materials (PCMs) as energy-efficient storage elements. PCMs are usually used in optical data storage, for example in DVDs or Blu-ray discs. In the new processor, they make it possible to store and preserve the matrix elements without the need for an energy supply. To carry out matrix multiplications on multiple data sets in parallel, the Münster physicists used a chip-based frequency comb as a light source. A frequency comb provides a variety of optical wavelengths which are processed independently of one another in the same photonic chip. This enables highly parallel data processing by calculating on all wavelengths simultaneously, a technique also known as wavelength multiplexing. “Our study is the first one to apply frequency combs in the field of artificial neural networks,” says Wolfram Pernice.
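The idea of wavelength multiplexing can be sketched in a few lines of Python: one stored matrix is applied to several input vectors, each carried on its own wavelength. This is only a numerical model under stated assumptions. The channel names, matrix values and function names are hypothetical, and the loop over channels stands in for what the photonic chip would do for all wavelengths at the same time.

```python
def matvec(matrix, vector):
    """Plain matrix-vector product on nested lists."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def multiplexed_matvec(matrix, inputs_by_wavelength):
    """Apply the same stored matrix to every wavelength channel.

    In software the channels are processed one after another; in the optical
    processor described above they would pass through the matrix simultaneously.
    """
    return {wavelength: matvec(matrix, vector)
            for wavelength, vector in inputs_by_wavelength.items()}


# Stands in for matrix elements held in the phase-change cells (assumed values).
stored_matrix = [[1.0, 0.0],
                 [0.5, 0.5]]

# One input vector per comb line; names and values are illustrative only.
inputs_by_wavelength = {
    "lambda_1": [2.0, 4.0],
    "lambda_2": [1.0, 3.0],
    "lambda_3": [0.0, 8.0],
}

outputs = multiplexed_matvec(stored_matrix, inputs_by_wavelength)
```

Taken together, the per-wavelength products amount to one matrix-matrix multiplication, which is what allows several data sets to be handled in a single pass.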

In the experiment, the physicists used a so-called convolutional neural network for the recognition of handwritten numbers. These networks are a concept in the field of machine learning inspired by biological processes. They are used primarily in the processing of image or audio data, as they currently achieve the highest accuracies of classification. “The convolutional operation between input data and one or more filters – which can be a highlighting of edges in a photo, for example – can be transferred very well to our matrix architecture,” explains Johannes Feldmann, the lead author of the study. “Exploiting light for signal transfer enables the processor to perform parallel data processing through wavelength multiplexing, which leads to a higher computing density and many matrix multiplications being carried out in just one timestep. In contrast to traditional electronics, which usually work in the low GHz range, optical modulation can reach speeds in the 50 to 100 GHz range.” This means that the process permits data rates and computing densities, i.e. operations per area of processor, never previously attained.
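How a convolution can be transferred to a matrix architecture can be shown with a small Python sketch. It uses a common software technique (flattening image patches into rows, often called im2col) and is not claimed to be the exact mapping used in the study. The image, the two filters and all function names are illustrative assumptions. As is usual in convolutional neural networks, the filter is applied without flipping.

```python
def extract_patches(image, k):
    """Flatten every k x k window of a 2D image into one row (stride 1, no padding)."""
    rows, cols = len(image), len(image[0])
    patches = []
    for r in range(rows - k + 1):
        for c in range(cols - k + 1):
            patches.append([image[r + i][c + j] for i in range(k) for j in range(k)])
    return patches


def conv_as_matmul(image, filters):
    """Apply several k x k filters as one product of patch matrix and filter matrix."""
    k = len(filters[0])
    # Each filter becomes one flattened vector of k*k weights.
    flat_filters = [[w for row in f for w in row] for f in filters]
    patches = extract_patches(image, k)
    # Result: one row per image patch, one column per filter.
    return [[sum(p * w for p, w in zip(patch, f)) for f in flat_filters]
            for patch in patches]


# Tiny test image with a vertical edge between the second and third column.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]

vertical_edge = [[-1, 1],
                 [-1, 1]]   # highlights left-to-right brightness steps
box_sum = [[1, 1],
           [1, 1]]          # sums the four pixels of a patch

result = conv_as_matmul(image, [vertical_edge, box_sum])
```

The edge filter responds only for the patches that straddle the brightness step, and both filters are evaluated by the same matrix product, which is the kind of operation a matrix multiplication accelerator can take over.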

The results have a wide range of applications. In the field of artificial intelligence, for example, more data can be processed simultaneously while saving energy. The use of larger neural networks allows more accurate, and hitherto unattainable, forecasts and more precise data analysis. Photonic processors can, for example, support the evaluation of large quantities of data in medical diagnoses, such as the high-resolution 3D data produced by special imaging methods. Further applications are in the fields of self-driving vehicles, which depend on rapid evaluation of sensor data, and of IT infrastructures such as cloud computing, which provide storage space, computing power or application software.

Original publication

Johannes Feldmann, Nathan Youngblood, Maxim Karpov, Helge Gehring, Xuan Li, Maik Stappers, Manuel Le Gallo, Xin Fu, Anton Lukashchuk, Arslan Raja, Junqiu Liu, David Wright, Abu Sebastian, Tobias Kippenberg, Wolfram Pernice, and Harish Bhaskaran; "Parallel convolution processing using an integrated photonic tensor core"; Nature; 2021
