Trust in AI, but not blindly

Artificial intelligence is sometimes met with scepticism, but it has to earn our trust

In the journal “Nature Machine Intelligence”, a research team at TU Darmstadt headed by Professor Kristian Kersting describes how this can be achieved with a clever approach to interactive learning.

Imagine the following situation: a company wants to teach an artificial intelligence (AI) to recognise horses in photos. To this end, it trains the AI on several thousand images of horses until the AI can reliably identify the animal even in images it has never seen before. The AI learns quickly. It is not clear to the company how the AI reaches its decisions, but this does not really matter to the company; it is simply impressed by how reliably the process works. Then a sceptical observer discovers that the bottom right corner of the photos carries a copyright notice with a link to a website about horses. The AI has made things easy for itself: it has learnt to recognise a horse based solely on this copyright notice. Researchers call such factors confounders, features that should have nothing to do with the identification task. The process works as long as the AI keeps receiving comparable photos, but as soon as the copyright notice is missing, the AI is left high and dry.

Over the last few years, various studies have shown how to uncover such undesired decision-making in AI systems, even when very large datasets are used for training. These cases have become known as the Clever Hans moments of AI, named after a horse that, in the early twentieth century, was supposed to be able to solve simple arithmetic problems but in fact only found the right answer by “reading” the body language of the questioner. “AI with a Clever Hans moment learns to draw the right conclusions for the wrong reasons”, says Kristian Kersting, Professor of Artificial Intelligence and Machine Learning in the Department of Computer Science at TU Darmstadt and a member of its Centre for Cognitive Science. Any AI researcher can potentially face this problem, and it makes clear why calls for “explainable AI” have grown louder in recent years.

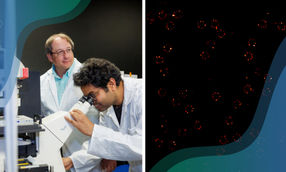

Kersting is well aware of the consequences of this problem: “Eliminating Clever Hans moments is one of the most important steps towards a practical application and dissemination of AI, in particular in scientific and in safety-critical areas.” For a number of years, he and his team have been developing AI solutions that can determine a plant's resistance to parasites or detect an infestation at an early stage, even before it is visible to the human eye. However, such an application can only succeed if the AI system is right for the right scientific reasons, so that domain experts actually trust it. If trust cannot be established, the experts will turn away from the AI and thus miss the opportunity to use it to develop resistant plants in times of global warming.

Nevertheless, trust must be earned. In a recent paper published in the journal “Nature Machine Intelligence”, Kersting and his team demonstrate how interactive learning can improve trust in AI. The idea is to integrate experts into the learning process, and these experts need to be able to understand what the AI is actually doing. More precisely, the AI system has to supply information on its active learning process: for example, which data it has taken from a training set, such as the image of a horse, and an explanation of how it arrives at a prediction based on this data. People with expert knowledge can then check both. If the prediction is fundamentally wrong, the AI can learn new rules; if both the prediction and the explanation are right, the experts do not need to do anything. The experts are left with a problem, however, if the prediction is right but the explanation is wrong: how do you teach an AI that its explanation is wrong?
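The three cases an expert can run into when reviewing a single prediction can be sketched as a small decision function. This is a hypothetical illustration, not part of the published method: the names `Feedback`, `Query` and `expert_review`, and the rule that an explanation counts as right when every feature it cites is one the expert considers relevant, are assumptions made for this sketch.

```python
from dataclasses import dataclass
from enum import Enum


class Feedback(Enum):
    """Hypothetical labels for what the expert does with one model query."""
    RELABEL = "prediction is wrong: provide the correct label"
    ACCEPT = "prediction and explanation are right: do nothing"
    CORRECT_EXPLANATION = "right prediction, wrong reasons: flag the explanation"


@dataclass
class Query:
    """What the model shows the expert: its prediction and the evidence it used."""
    prediction: str
    explanation: set[str]  # features the model says it relied on


def expert_review(query: Query, true_label: str, relevant: set[str]) -> Feedback:
    # Case 1: the prediction itself is wrong.
    if query.prediction != true_label:
        return Feedback.RELABEL
    # Case 2: right prediction, and every cited feature is one the expert accepts.
    if query.explanation <= relevant:
        return Feedback.ACCEPT
    # Case 3: right prediction for the wrong reasons (a Clever Hans moment).
    return Feedback.CORRECT_EXPLANATION


# The horse photo from the example: correct label, but the model cites the notice.
query = Query(prediction="horse", explanation={"copyright notice"})
print(expert_review(query, "horse", relevant={"mane", "hooves", "body shape"}))
# → Feedback.CORRECT_EXPLANATION
```

Only the third case needs a new kind of feedback; the first is ordinary label correction and the second requires nothing.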

Together with colleagues from the University of Bonn, the University of Göttingen, the University of Trento, and the company LemnaTec, the team has now developed a novel approach for this purpose called “explanatory interactive learning” (XIL). In simple terms, XIL adds the expert to the training loop so that she can interactively revise the AI system by giving feedback on its explanations. In the example described above, the AI would indicate that it considers the copyright information to be relevant. The expert simply says: “No, this is not right.” As a result, the AI pays less and less attention to this information in the future.
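One common way to turn the expert's “no, this is not right” into a training signal is a “right for the right reasons”-style penalty: the expert marks input features as irrelevant, and the training loss punishes the model's explanation (here, its input gradient) in those places. The sketch below is a minimal illustration under loud assumptions, not the authors' code: it swaps the CNN for plain logistic regression on hypothetical two-feature toy data (feature 0 plays the copyright notice, feature 1 a noisy genuine horse signal), and the helper names `make_data`, `train` and `accuracy` as well as the penalty strength `lam=5.0` are made up for this example. For a linear model, the input gradient of the logit is simply the weight vector, so the penalty reduces to a masked L2 term on the flagged weights.

```python
import numpy as np


def make_data(n, rng, notice_present):
    # Hypothetical toy data: feature 0 is the "copyright notice", feature 1 a noisy
    # but genuine horse signal. In training, the notice coincides with the label.
    y = rng.integers(0, 2, size=n)
    signal = y + 0.8 * rng.standard_normal(n)
    notice = y.astype(float) if notice_present else np.zeros(n)
    return np.column_stack([notice, signal]), y


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train(X, y, irrelevant_mask, lam, lr=0.1, steps=3000):
    """Logistic regression by gradient descent with an explanation penalty.

    The explanation is the input gradient of the logit, d(w.x + b)/dx = w.
    The loss is mean cross-entropy + lam * sum(irrelevant_mask * w**2), so
    weights on features the expert flagged are pushed towards zero.
    (lr=0.1 keeps the update stable for lam=5.0, since lr * 2 * lam <= 1.)
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = sigmoid(X @ w + b)
        grad_w = X.T @ (p - y) / len(y) + 2 * lam * irrelevant_mask * w
        grad_b = np.mean(p - y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def accuracy(w, b, X, y):
    return np.mean((X @ w + b > 0) == y)


rng = np.random.default_rng(0)
X_train, y_train = make_data(1000, rng, notice_present=True)
X_test, y_test = make_data(2000, rng, notice_present=False)  # the notice is missing

feedback = np.array([1.0, 0.0])  # expert: "feature 0 (the notice) is not right"

w_base, b_base = train(X_train, y_train, feedback, lam=0.0)    # no expert feedback
w_fixed, b_fixed = train(X_train, y_train, feedback, lam=5.0)  # with expert feedback

print("baseline: ", w_base, accuracy(w_base, b_base, X_test, y_test))
print("corrected:", w_fixed, accuracy(w_fixed, b_fixed, X_test, y_test))
```

In this toy setting, the uncorrected model leans heavily on feature 0 and loses much of its test accuracy once the notice disappears, while the corrected model keeps its weight on feature 0 close to zero and has to rely on the noisier genuine signal. That trade-off, a less convenient fit in exchange for the right reasons, loosely mirrors the one described in the next paragraph; it does not reproduce the paper's experiments.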

Supported by the Federal Ministry of Food and Agriculture (BMEL), the research team at TU Darmstadt tested XIL on a dataset for Cercospora leaf spot, a harmful leaf disease of sugar beet that is found all over the world. The AI, a convolutional neural network (CNN), initially learnt to focus on areas of the hyperspectral data that, according to plant experts, could not be relevant for identifying the pathogen, even though its predictions were highly accurate. After a correction stage using explanatory interactive learning (XIL), the detection rate fell only slightly, but the AI drew the right conclusions for the right reasons. The experts are able to work with this kind of AI. The AI may learn more slowly, but in the long run it delivers more reliable predictions.

“Interaction and understandability are thus crucially important for building trust in AI systems that learn from data”, says Kersting. Surprisingly, the links between interaction, explanation and building trust have largely been ignored in research – until now.

Original publication

Patrick Schramowski, Wolfgang Stammer, Stefano Teso, Anna Brugger, Franziska Herbert, Xiaoting Shao, Hans-Georg Luigs, Anne-Katrin Mahlein & Kristian Kersting; "Making deep neural networks right for the right scientific reasons by interacting with their explanations"; Nature Machine Intelligence; 2, 476-486 (2020).